detectnet_v2

Object Detection using TAO DetectNet_v2

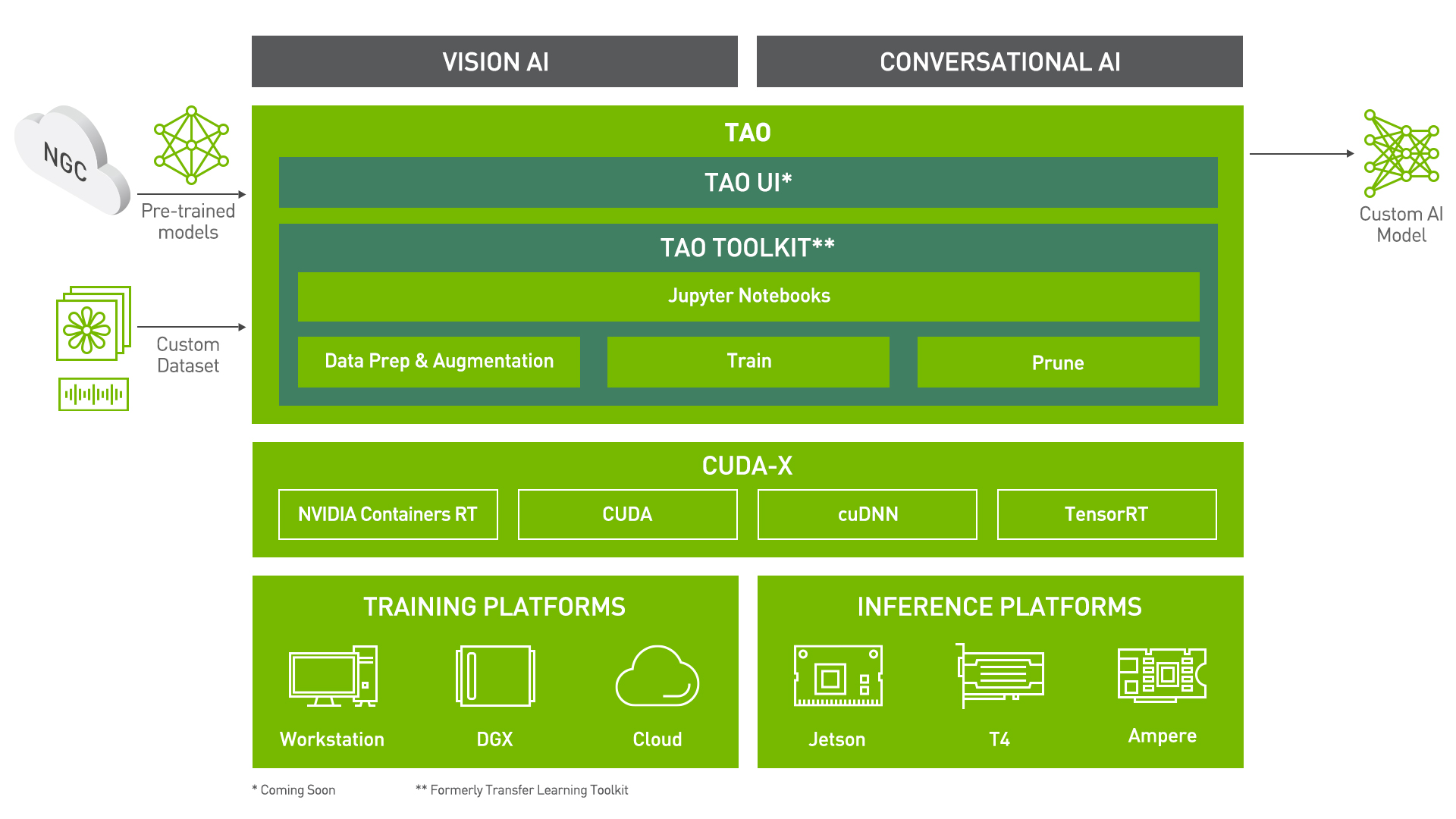

Transfer learning is the process of transferring learned features from one application to another. It is a commonly used training technique where you take a model trained on one task and retrain it for use on a different task.

Train Adapt Optimize (TAO) Toolkit is a simple and easy-to-use Python-based AI toolkit for taking purpose-built AI models and customizing them with users' own data.

Learning Objectives

In this notebook, you will learn how to leverage the simplicity and convenience of TAO to:

- Take a pretrained resnet18 model and train a ResNet-18 DetectNet_v2 model on the KITTI dataset

- Prune the trained detectnet_v2 model

- Retrain the pruned model to recover lost accuracy

- Export the pruned model

- Quantize the pruned model using QAT

- Run Inference on the trained model

- Export the pruned, quantized and retrained model to a .etlt file for deployment to DeepStream

- Run inference on the exported .etlt model to verify deployment using TensorRT

Table of Contents

This notebook shows an example use case of Object Detection using DetectNet_v2 in the Train Adapt Optimize (TAO) Toolkit.

- Set up env variables and map drives

- Install the TAO Launcher

- Prepare dataset and pre-trained model

- Provide training specification

- Run TAO training

- Evaluate trained models

- Prune trained models

- Retrain pruned models

- Evaluate retrained model

- Visualize inferences

- Model Export

- Verify Deployed Model

- QAT workflow

0. Set up env variables and map drives

When using the purpose-built pretrained models from NGC, please make sure to set the $KEY environment variable to the key mentioned in the model overview. Failing to do so can lead to errors when trying to load them as pretrained models.

The following notebook requires the user to set an env variable called $LOCAL_PROJECT_DIR as the path to the user's workspace. Please note that the dataset to run this notebook is expected to reside in $LOCAL_PROJECT_DIR/data, while the artifacts generated by the TAO experiments will be output to $LOCAL_PROJECT_DIR/detectnet_v2. More information on how to set up the dataset and the supported steps in the TAO workflow is provided in the subsequent cells.

Note: Please remove any stray artifacts/files generated by previous experiments from the $USER_EXPERIMENT_DIR or $DATA_DOWNLOAD_DIR paths defined below. Leftover checkpoint files and similar artifacts may interfere with creating a training graph for a new experiment.

Note: By default, this notebook is set up to run training on 1 GPU. To use more GPUs, please update the env variable $NUM_GPUS accordingly.

# Setting up env variables for cleaner command line commands.

import os

%env KEY=tlt_encode

%env NUM_GPUS=1

%env USER_EXPERIMENT_DIR=/workspace/tao-experiments/detectnet_v2

%env DATA_DOWNLOAD_DIR=/workspace/tao-experiments/data

# Set this path if you don't run the notebook from the samples directory.

# %env NOTEBOOK_ROOT=~/tao-samples/detectnet_v2

# Please define this local project directory that needs to be mapped to the TAO docker session.

# The dataset is expected to be present in $LOCAL_PROJECT_DIR/data, while the results for the steps

# in this notebook will be stored at $LOCAL_PROJECT_DIR/detectnet_v2

# !PLEASE MAKE SURE TO UPDATE THIS PATH!.

os.environ["LOCAL_PROJECT_DIR"] = FIXME

os.environ["LOCAL_DATA_DIR"] = os.path.join(

os.getenv("LOCAL_PROJECT_DIR", os.getcwd()),

"data"

)

os.environ["LOCAL_EXPERIMENT_DIR"] = os.path.join(

os.getenv("LOCAL_PROJECT_DIR", os.getcwd()),

"detectnet_v2"

)

# The sample spec files are present in the same path as the downloaded samples.

os.environ["LOCAL_SPECS_DIR"] = os.path.join(

os.getenv("NOTEBOOK_ROOT", os.getcwd()),

"specs"

)

%env SPECS_DIR=/workspace/tao-experiments/detectnet_v2/specs

# Showing list of specification files.

!ls -rlt $LOCAL_SPECS_DIR

The cell below maps the project directory on your local host to a workspace directory in the TAO docker instance, so that the data and the results are mapped in and out of the docker. For more information, please refer to the launcher instance in the user guide.

When running this cell on AWS, update the drive_map entry with the dictionary defined below, so that you don't have permission issues when writing data into folders created by the TAO docker.

drive_map = {

"Mounts": [

# Mapping the data directory

{

"source": os.environ["LOCAL_PROJECT_DIR"],

"destination": "/workspace/tao-experiments"

},

# Mapping the specs directory.

{

"source": os.environ["LOCAL_SPECS_DIR"],

"destination": os.environ["SPECS_DIR"]

},

],

"DockerOptions": {

"user": "{}:{}".format(os.getuid(), os.getgid())

}

}
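To see how the "Mounts" entries are used, the sketch below translates a host path into the path the TAO container would see. The helper `to_container_path` is hypothetical (it is not part of the TAO launcher), and resolving nested mounts by picking the longest matching "source" prefix is an assumption made for illustration.

```python
import os
from typing import Dict, List


def to_container_path(local_path: str, mounts: List[Dict[str, str]]) -> str:
    """Translate a host path into the corresponding path inside the container.

    Hypothetical helper: the mount whose "source" is the longest prefix of
    local_path wins, so a nested mount takes precedence over its parent.
    """
    local_path = os.path.normpath(local_path)
    best_source, best_destination = None, None
    for mount in mounts:
        source = os.path.normpath(mount["source"])
        inside = local_path == source or local_path.startswith(source + os.sep)
        if inside and (best_source is None or len(source) > len(best_source)):
            best_source, best_destination = source, mount["destination"]
    if best_source is None:
        raise ValueError("{} is not under any mounted directory".format(local_path))
    relative = os.path.relpath(local_path, best_source)
    # relpath returns "." when local_path is exactly the mounted directory.
    return best_destination if relative == "." else os.path.join(best_destination, relative)
```

With the mapping in this notebook, `to_container_path(os.environ["LOCAL_DATA_DIR"], drive_map["Mounts"])` should resolve to the same directory as $DATA_DOWNLOAD_DIR, because $LOCAL_DATA_DIR sits under $LOCAL_PROJECT_DIR.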

# Mapping up the local directories to the TAO docker.

import json

mounts_file = os.path.expanduser("~/.tao_mounts.json")

# Define the dictionary with the mapped drives

drive_map = {

"Mounts": [

# Mapping the data directory

{

"source": os.environ["LOCAL_PROJECT_DIR"],

"destination": "/workspace/tao-experiments"

},

# Mapping the specs directory.

{

"source": os.environ["LOCAL_SPECS_DIR"],

"destination": os.environ["SPECS_DIR"]

},

]

}

# Writing the mounts file.

with open(mounts_file, "w") as mfile:

json.dump(drive_map, mfile, indent=4)

!cat ~/.tao_mounts.json

1. Install the TAO launcher

The TAO launcher is a Python package distributed as a Python wheel listed in the nvidia-pyindex Python index. You may install the launcher by executing the following cell.

Please note that TAO Toolkit recommends running the TAO launcher in a virtual env with Python 3.6.9. You may follow the instructions on this page to set up a Python virtual env using the virtualenv and virtualenvwrapper packages. Once you have set up virtualenvwrapper, please set the version of Python to be used in the virtual env with the VIRTUALENVWRAPPER_PYTHON variable. You may do so by running

export VIRTUALENVWRAPPER_PYTHON=/path/to/bin/python3.x

where x >= 6 and <= 8

We recommend performing this step first and then launching the notebook from the virtual environment. In addition to installing the TAO Python package, please make sure the following software requirements are met:

- python >=3.6.9 < 3.8.x

- docker-ce > 19.03.5

- docker-API 1.40

- nvidia-container-toolkit > 1.3.0-1

- nvidia-container-runtime > 3.4.0-1

- nvidia-docker2 > 2.5.0-1

- nvidia-driver 455+
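The driver requirement above can be checked from Python. This is a sketch: `driver_meets_minimum` and `installed_driver_versions` are hypothetical helpers, and they rely on `nvidia-smi --query-gpu=driver_version --format=csv,noheader`, which prints one driver version per GPU. Only the major version is compared against 455.

```python
import shutil
import subprocess
from typing import List

MIN_DRIVER_MAJOR = 455  # taken from the "nvidia-driver 455+" requirement


def driver_meets_minimum(version: str, minimum_major: int = MIN_DRIVER_MAJOR) -> bool:
    """Return True if a driver version string such as '470.57.02' is new enough."""
    major = int(version.strip().split(".")[0])
    return major >= minimum_major


def installed_driver_versions() -> List[str]:
    """Query the driver version of each GPU via nvidia-smi; empty list if it is missing."""
    if shutil.which("nvidia-smi") is None:
        return []
    # stdout=PIPE/universal_newlines keep this compatible with Python 3.6.
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        stdout=subprocess.PIPE, universal_newlines=True, check=True,
    ).stdout
    return [line.strip() for line in out.splitlines() if line.strip()]
```

In a notebook cell, `[(v, driver_meets_minimum(v)) for v in installed_driver_versions()]` lists each GPU's driver version next to the result of the check.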

Once you have installed the prerequisites, please log in to the docker registry nvcr.io by running the command below:

docker login nvcr.io

You will be prompted to enter a username and password. The username is $oauthtoken and the password is the API key generated from ngc.nvidia.com. Please follow the instructions in the NGC setup guide to generate your own API key.

# SKIP this step IF you have already installed the TAO launcher wheel.

!pip3 install nvidia-pyindex

!pip3 install nvidia-tao

# View the versions of the TAO launcher

!tao info

2. Prepare dataset and pre-trained model

We will be using the KITTI object detection dataset for this example. To find more details, please visit http://www.cvlibs.net/datasets/kitti/eval_object.php?obj_benchmark=2d. Please download both the left color images of the object dataset from here and the training labels for the object dataset from here, and place the zip files in $LOCAL_DATA_DIR.

The data will then be extracted to have

- training images in $LOCAL_DATA_DIR/training/image_2

- training labels in $LOCAL_DATA_DIR/training/label_2

- testing images in $LOCAL_DATA_DIR/testing/image_2

You may use this notebook with your own dataset as well. To use this example with your own dataset, please follow the same directory structure as mentioned above.

Note: There are no labels for the testing images, so we use them only to visualize inferences from the trained model.
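When bringing your own dataset into this layout, a quick sanity check is that every training image has a label file with the same stem and vice versa. The sketch below is a hypothetical helper, not part of TAO; the set of accepted image extensions is an assumption.

```python
import os
from typing import Dict, List

IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg"}  # assumed image formats


def check_kitti_pairs(root: str) -> Dict[str, List[str]]:
    """Compare image and label file stems under <root>/training.

    Returns the stems that have an image but no label, and vice versa.
    """
    image_dir = os.path.join(root, "training", "image_2")
    label_dir = os.path.join(root, "training", "label_2")
    images = {os.path.splitext(f)[0] for f in os.listdir(image_dir)
              if os.path.splitext(f)[1].lower() in IMAGE_EXTENSIONS}
    labels = {os.path.splitext(f)[0] for f in os.listdir(label_dir)
              if f.endswith(".txt")}
    return {
        "images_without_labels": sorted(images - labels),
        "labels_without_images": sorted(labels - images),
    }
```

After extraction, `check_kitti_pairs(os.environ["LOCAL_DATA_DIR"])` should return two empty lists for a consistent dataset.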

A. Download the dataset

Once you have received the download links in your email, please use them in place of KITTI_IMAGES_DOWNLOAD_URL and KITTI_LABELS_DOWNLOAD_URL. The next cell downloads the data and places it in $LOCAL_DATA_DIR.

import os

!mkdir -p $LOCAL_DATA_DIR

os.environ["URL_IMAGES"]=KITTI_IMAGES_DOWNLOAD_URL

!if [ ! -f $LOCAL_DATA_DIR/data_object_image_2.zip ]; then wget $URL_IMAGES -O $LOCAL_DATA_DIR/data_object_image_2.zip; else echo "image archive already downloaded"; fi

os.environ["URL_LABELS"]=KITTI_LABELS_DOWNLOAD_URL

!if [ ! -f $LOCAL_DATA_DIR/data_object_label_2.zip ]; then wget $URL_LABELS -O $LOCAL_DATA_DIR/data_object_label_2.zip; else echo "label archive already downloaded"; fi

B. Verify downloaded dataset

# Check the dataset is present

!if [ ! -f $LOCAL_DATA_DIR/data_object_image_2.zip ]; then echo 'Image zip file not found, please download.'; else echo 'Found Image zip file.';fi

!if [ ! -f $LOCAL_DATA_DIR/data_object_label_2.zip ]; then echo 'Label zip file not found, please download.'; else echo 'Found Labels zip file.';fi

# This may take a while: verify integrity of zip files

!sha256sum $LOCAL_DATA_DIR/data_object_image_2.zip | cut -d ' ' -f 1 | grep -xq '^351c5a2aa0cd9238b50174a3a62b846bc5855da256b82a196431d60ff8d43617$' ; \

if test $? -eq 0; then echo "images OK"; else echo "images corrupt, redownload!" && rm -f $LOCAL_DATA_DIR/data_object_image_2.zip; fi

!sha256sum $LOCAL_DATA_DIR/data_object_label_2.zip | cut -d ' ' -f 1 | grep -xq '^4efc76220d867e1c31bb980bbf8cbc02599f02a9cb4350effa98dbb04aaed880$' ; \

if test $? -eq 0; then echo "labels OK"; else echo "labels corrupt, redownload!" && rm -f $LOCAL_DATA_DIR/data_object_label_2.zip; fi

# unpack downloaded datasets to $DATA_DOWNLOAD_DIR.

# The training images will be under $DATA_DOWNLOAD_DIR/training/image_2 and

# labels will be under $DATA_DOWNLOAD_DIR/training/label_2.

# The testing images will be under $DATA_DOWNLOAD_DIR/testing/image_2.

!unzip -u $LOCAL_DATA_DIR/data_object_image_2.zip -d $LOCAL_DATA_DIR

!unzip -u $LOCAL_DATA_DIR/data_object_label_2.zip -d $LOCAL_DATA_DIR

# verify

import os

DATA_DIR = os.environ.get('LOCAL_DATA_DIR')

num_training_images = len(os.listdir(os.path.join(DATA_DIR, "training/image_2")))

num_training_labels = len(os.listdir(os.path.join(DATA_DIR, "training/label_2")))

num_testing_images = len(os.listdir(os.path.join(DATA_DIR, "testing/image_2")))

print("Number of images in the train/val set: {}".format(num_training_images))

print("Number of labels in the train/val set: {}".format(num_training_labels))

print("Number of images in the test set: {}".format(num_testing_images))

# Sample kitti label.

!cat $LOCAL_DATA_DIR/training/label_2/000110.txt

C. Prepare tfrecords from kitti format dataset

- Update the tfrecords spec file to take in your kitti format dataset

- Create the tfrecords using the detectnet_v2 dataset_convert tool

Note: TFRecords only need to be generated once.
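dataset_convert reads KITTI-format label files like the sample printed above. Each line holds 15 whitespace-separated fields: class name, truncation, occlusion, observation angle, the 2D box (left, top, right, bottom in pixels), 3D dimensions, 3D location and rotation_y. The sketch below parses only the fields relevant to 2D detection, which can help when checking your own labels before conversion; `KittiBox`, `parse_kitti_line` and `parse_kitti_file` are hypothetical names, not TAO APIs.

```python
from typing import List, NamedTuple


class KittiBox(NamedTuple):
    label: str
    truncated: float
    occluded: int
    left: float
    top: float
    right: float
    bottom: float


def parse_kitti_line(line: str) -> KittiBox:
    """Parse one KITTI object label line, keeping only the 2D-detection fields."""
    fields = line.split()
    if len(fields) < 15:
        raise ValueError("expected at least 15 fields, got {}".format(len(fields)))
    # Fields 4..7 are the 2D bounding box in pixel coordinates.
    left, top, right, bottom = (float(v) for v in fields[4:8])
    return KittiBox(fields[0], float(fields[1]), int(float(fields[2])),
                    left, top, right, bottom)


def parse_kitti_file(path: str) -> List[KittiBox]:
    """Parse every non-empty line of a KITTI label file."""
    with open(path) as f:
        return [parse_kitti_line(line) for line in f if line.strip()]
```

For example, `parse_kitti_file(os.path.join(os.environ["LOCAL_DATA_DIR"], "training/label_2/000110.txt"))` returns the boxes from the sample label shown earlier; boxes with `right <= left` or `bottom <= top` are worth inspecting.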

print("TFrecords conversion spec file for kitti training")

!cat $LOCAL_SPECS_DIR/detectnet_v2_tfrecords_kitti_trainval.txt

# Creating a new directory for the output tfrecords dump.

print("Converting Tfrecords for kitti trainval dataset")

!mkdir -p $LOCAL_DATA_DIR/tfrecords && rm -rf $LOCAL_DATA_DIR/tfrecords/*

!tao detectnet_v2 dataset_convert \

-d $SPECS_DIR/detectnet_v2_tfrecords_kitti_trainval.txt \

-o $DATA_DOWNLOAD_DIR/tfrecords/kitti_trainval/kitti_trainval

!ls -rlt $LOCAL_DATA_DIR/tfrecords/kitti_trainval/

D. Download pre-trained model

Download the correct pretrained model from the NGC model registry for your experiment. Please note that for DetectNet_v2, the input is expected to be 0-1 normalized with input channels in RGB order. Therefore, for optimum results, please download model templates from nvidia/tao/pretrained_detectnet_v2. The templates are organized as version strings. For example, to download a ResNet-18 model suitable for DetectNet_v2, use the NGC object nvidia/tao/pretrained_detectnet_v2:resnet18.

All other models use BGR channel order and expect input preprocessing with mean subtraction. Using them as pretrained weights may result in suboptimal performance.
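The difference between the two input conventions is easiest to see side by side. This is a sketch with hypothetical function names; the per-channel BGR means used for the contrast case are the commonly used ImageNet values and are an assumption, since the text does not say which means other models expect.

```python
import numpy as np


def detectnet_v2_preprocess(image_rgb_hwc: np.ndarray) -> np.ndarray:
    """uint8 RGB image (H, W, 3) -> float32 (3, H, W) in [0, 1], RGB order kept."""
    x = image_rgb_hwc.astype(np.float32) / 255.0
    return np.transpose(x, (2, 0, 1))


def mean_subtracted_bgr_preprocess(image_rgb_hwc: np.ndarray,
                                   mean_bgr=(103.939, 116.779, 123.68)) -> np.ndarray:
    """Contrast case: BGR channel order, per-channel mean subtraction, no scaling.

    The default means are an assumption (common ImageNet BGR means).
    """
    bgr = image_rgb_hwc[..., ::-1].astype(np.float32)  # reverse channels: RGB -> BGR
    x = bgr - np.asarray(mean_bgr, dtype=np.float32)
    return np.transpose(x, (2, 0, 1))
```

Feeding weights trained with one convention inputs prepared with the other shifts both the value range and the channel order, which is why the text warns of suboptimal results.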

You may also use this notebook with the following purpose-built pretrained models

# Installing NGC CLI on the local machine.

## Download and install

%env CLI=ngccli_cat_linux.zip

!mkdir -p $LOCAL_PROJECT_DIR/ngccli

# Remove any previously existing CLI installations

!rm -rf $LOCAL_PROJECT_DIR/ngccli/*

!wget "https://ngc.nvidia.com/downloads/$CLI" -P $LOCAL_PROJECT_DIR/ngccli

!unzip -u "$LOCAL_PROJECT_DIR/ngccli/$CLI" -d $LOCAL_PROJECT_DIR/ngccli/

!rm $LOCAL_PROJECT_DIR/ngccli/*.zip

os.environ["PATH"]="{}/ngccli:{}".format(os.getenv("LOCAL_PROJECT_DIR", ""), os.getenv("PATH", ""))

# List models available in the model registry.

!ngc registry model list nvidia/tao/pretrained_detectnet_v2:*

# Create the target destination to download the model.

!mkdir -p $LOCAL_EXPERIMENT_DIR/pretrained_resnet18/

# Download the pretrained model from NGC

!ngc registry model download-version nvidia/tao/pretrained_detectnet_v2:resnet18 \

--dest $LOCAL_EXPERIMENT_DIR/pretrained_resnet18

!ls -rlt $LOCAL_EXPERIMENT_DIR/pretrained_resnet18/pretrained_detectnet_v2_vresnet18

3. Provide training specification

- Tfrecords for the train datasets

  - To use the newly generated tfrecords, update the dataset_config parameter in the spec file at $SPECS_DIR/detectnet_v2_train_resnet18_kitti.txt

  - Update the fold number to use for evaluation. In case of random data split, please use fold 0 only

  - For sequence-wise split, you may use any fold generated from the dataset convert tool

- Pre-trained models

- Augmentation parameters for on-the-fly data augmentation

- Other training (hyper-)parameters such as batch size, number of epochs, learning rate etc.

!cat $LOCAL_SPECS_DIR/detectnet_v2_train_resnet18_kitti.txt

4. Run TAO training

- Provide the sample spec file and the output directory location for models

Note: Training may take hours to complete. Also, the remainder of this notebook assumes that training was done in single-GPU mode. When training in multi-GPU mode, expect to update the pruning and inference steps with new pruning thresholds and updated parameters in the clusterfile.json accordingly for optimum performance.

DetectNet_v2 supports restarting from a checkpoint. If the training job is killed prematurely, you may resume training from the most recent checkpoint by simply re-running the same command. Please make sure to use the same number of GPUs when restarting the training.

When running the training with NUM_GPUS > 1, you may need to modify batch_size_per_gpu and the learning rate to get a mAP similar to that of a 1-GPU training run. In most cases, scaling down the batch size by a factor of NUM_GPUS or scaling up the learning rate by a factor of NUM_GPUS is a good place to start.
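The two starting points described above can be computed from the 1-GPU values as in the sketch below. `multi_gpu_starting_points` is a hypothetical helper; you would still copy the chosen values into the spec file by hand, and if your spec defines the learning rate through a schedule with several rate values, scaling each of them is an assumption rather than documented guidance.

```python
from typing import Dict


def multi_gpu_starting_points(batch_size_per_gpu: int, learning_rate: float,
                              num_gpus: int) -> Dict[str, dict]:
    """Two heuristic starting points for an N-GPU run, derived from a 1-GPU config.

    "scale_batch": divide the per-GPU batch by num_gpus so the global batch
    (batch_size_per_gpu * num_gpus) stays close to the 1-GPU run.
    "scale_lr": keep the per-GPU batch and multiply the learning rate by num_gpus.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be >= 1")
    return {
        "scale_batch": {
            # Never go below one sample per GPU.
            "batch_size_per_gpu": max(1, batch_size_per_gpu // num_gpus),
            "learning_rate": learning_rate,
        },
        "scale_lr": {
            "batch_size_per_gpu": batch_size_per_gpu,
            "learning_rate": learning_rate * num_gpus,
        },
    }
```

For instance, `multi_gpu_starting_points(4, 5e-4, int(os.environ["NUM_GPUS"]))` uses placeholder 1-GPU values; substitute the ones from your own spec file.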

!tao detectnet_v2 train -e $SPECS_DIR/detectnet_v2_train_resnet18_kitti.txt \

-r $USER_EXPERIMENT_DIR/experiment_dir_unpruned \

-k $KEY \

-n resnet18_detector \

--gpus $NUM_GPUS

print('Model for each epoch:')

print('---------------------')

!ls -lh $LOCAL_EXPERIMENT_DIR/experiment_dir_unpruned/weights

5. Evaluate the trained model

!tao detectnet_v2 evaluate -e $SPECS_DIR/detectnet_v2_train_resnet18_kitti.txt \

-m $USER_EXPERIMENT_DIR/experiment_dir_unpruned/weights/resnet18_detector.tlt \

-k $KEY

6. Prune the trained model

- Specify pre-trained model

- Equalization criterion (applicable for ResNets and MobileNets)

- Threshold for pruning

- A key to save and load the model

- Output directory to store the model

Usually, you just need to adjust -pth (threshold) to trade off accuracy against model size. A higher pth gives you a smaller model (and thus higher inference speed) but worse accuracy. The threshold to use depends on the dataset. A pth value of 5.2e-6 is just a starting point. If the retrained accuracy is good, you can increase this value to get smaller models. Otherwise, lower this value to get better accuracy.

In some internal studies, we have noticed that a pth value of 0.01 is a good starting point for detectnet_v2 models.
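For intuition about what a threshold and a "union" equalization criterion could mean, the sketch below shows a simplified magnitude-based pruning rule. It is an illustration under our own assumptions (per-filter L2 norms divided by the largest norm in the layer, and "union" read as "keep a channel if any of the tied layers keeps it"); it is not TAO's pruning implementation, and `filters_to_keep` and `union_keep` are hypothetical names.

```python
import numpy as np


def filters_to_keep(kernel: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean mask over the output filters of a conv kernel shaped (kh, kw, in, out).

    Each filter's L2 norm is divided by the largest filter norm in the layer;
    filters whose normalized norm is below `threshold` are marked for removal.
    """
    norms = np.sqrt((kernel.reshape(-1, kernel.shape[-1]) ** 2).sum(axis=0))
    peak = norms.max()
    if peak == 0:
        # Filters cannot be ranked in an all-zero layer, so keep them all.
        return np.ones(norms.shape, dtype=bool)
    return (norms / peak) >= threshold


def union_keep(*masks: np.ndarray) -> np.ndarray:
    """Layers joined by an element-wise op (e.g. a residual add) must keep the
    same channels; under a 'union' rule a channel survives if any layer keeps it."""
    return np.logical_or.reduce(masks)
```

In this sketch a tiny threshold such as 5.2e-6 removes only filters that are almost zero relative to the strongest filter, while larger values remove progressively more, which matches the size/accuracy trade-off described above.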

# Create an output directory if it doesn't exist.

!mkdir -p $LOCAL_EXPERIMENT_DIR/experiment_dir_pruned

!tao detectnet_v2 prune \

-m $USER_EXPERIMENT_DIR/experiment_dir_unpruned/weights/resnet18_detector.tlt \

-o $USER_EXPERIMENT_DIR/experiment_dir_pruned/resnet18_nopool_bn_detectnet_v2_pruned.tlt \

-eq union \

-pth 0.0000052 \

-k $KEY

!ls -rlt $LOCAL_EXPERIMENT_DIR/experiment_dir_pruned/

7. Retrain the pruned model

- Model needs to be re-trained to bring back accuracy after pruning

- Specify a retraining spec that uses the pruned model as pretrained weights.

Note: For retraining, please set the load_graph option to true in the model_config to load the pruned model graph. Also, if the model shows some decrease in mAP after retraining, the originally trained model may have been pruned a little too much. Please try reducing the pruning threshold (thereby reducing the pruning ratio) and use the new model to retrain.

Note: DetectNet_v2 now supports Quantization Aware Training (QAT) to help with optimizing the model. By default, the training in the cell below doesn't run the model with QAT enabled. For information on training a model with QAT, please refer to the cells under the QAT workflow section.

# Printing the retrain experiment file.

# Note: We have updated the experiment file to include the

# newly pruned model as pretrained weights, and the

# load_graph option is set to true

!cat $LOCAL_SPECS_DIR/detectnet_v2_retrain_resnet18_kitti.txt

# Retraining using the pruned model as pretrained weights

!tao detectnet_v2 train -e $SPECS_DIR/detectnet_v2_retrain_resnet18_kitti.txt \

-r $USER_EXPERIMENT_DIR/experiment_dir_retrain \

-k $KEY \

-n resnet18_detector_pruned \

--gpus $NUM_GPUS

# Listing the newly retrained model.

!ls -rlt $LOCAL_EXPERIMENT_DIR/experiment_dir_retrain/weights

8. Evaluate the retrained model

This section evaluates the pruned and retrained model using the evaluate command.

!tao detectnet_v2 evaluate -e $SPECS_DIR/detectnet_v2_retrain_resnet18_kitti.txt \

-m $USER_EXPERIMENT_DIR/experiment_dir_retrain/weights/resnet18_detector_pruned.tlt \

-k $KEY

9. Visualize inferences

In this section, we run the inference tool to generate inferences on the trained models. To render bboxes from more classes, please edit the spec file detectnet_v2_inference_kitti_tlt.txt to include all the classes you would like to visualize and edit the rest of the file accordingly.

# Running inference for detection on n images

!tao detectnet_v2 inference -e $SPECS_DIR/detectnet_v2_inference_kitti_tlt.txt \

-o $USER_EXPERIMENT_DIR/tlt_infer_testing \

-i $DATA_DOWNLOAD_DIR/testing/image_2 \

-k $KEY

The inference tool produces two outputs:

- Overlain images in $USER_EXPERIMENT_DIR/tlt_infer_testing/images_annotated

- Frame-by-frame bbox labels in kitti format located in $USER_EXPERIMENT_DIR/tlt_infer_testing/labels

Note: To run inference on a single image, simply replace the path passed to the -i flag in the inference command with the path to the image.
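The per-frame label files make it easy to summarize what the model detected. The sketch below tallies detections per class; `count_detections` is a hypothetical helper, and treating a 16th column (if present) as a confidence score is an assumption about the output format, so verify it against your own label files before relying on the filter.

```python
import os
from collections import Counter


def count_detections(label_dir: str, min_confidence: float = 0.0) -> Counter:
    """Tally detections per class across KITTI-format label files.

    The first field is taken as the class name. If a 16th field exists it is
    assumed to be a confidence score and lines below min_confidence are skipped.
    """
    counts = Counter()
    for name in sorted(os.listdir(label_dir)):
        if not name.endswith(".txt"):
            continue
        with open(os.path.join(label_dir, name)) as f:
            for line in f:
                fields = line.split()
                if not fields:
                    continue
                if len(fields) >= 16 and float(fields[15]) < min_confidence:
                    continue
                counts[fields[0]] += 1
    return counts
```

For example, `count_detections(os.path.join(os.environ["LOCAL_EXPERIMENT_DIR"], "tlt_infer_testing/labels"))` gives a per-class count for the test images.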

# Simple grid visualizer

!pip3 install matplotlib==3.3.3

%matplotlib inline

import matplotlib.pyplot as plt

import os

from math import ceil

valid_image_ext = ['.jpg', '.png', '.jpeg', '.ppm']

def visualize_images(image_dir, num_cols=4, num_images=10):
    # image_dir is relative to $LOCAL_EXPERIMENT_DIR (mounted as $USER_EXPERIMENT_DIR).
    output_path = os.path.join(os.environ['LOCAL_EXPERIMENT_DIR'], image_dir)
    num_rows = int(ceil(float(num_images) / float(num_cols)))
    # squeeze=False keeps axarr 2-D even when the grid has a single row.
    f, axarr = plt.subplots(num_rows, num_cols, figsize=[80, 30], squeeze=False)
    f.tight_layout()
    # Sort so that "the first N images" is deterministic.
    a = [os.path.join(output_path, image) for image in sorted(os.listdir(output_path))
         if os.path.splitext(image)[1].lower() in valid_image_ext]
    for idx, img_path in enumerate(a[:num_images]):
        col_id = idx % num_cols
        row_id = idx // num_cols
        img = plt.imread(img_path)
        axarr[row_id, col_id].imshow(img)

# Visualizing the first 12 images.

OUTPUT_PATH = 'tlt_infer_testing/images_annotated' # relative path from $USER_EXPERIMENT_DIR.

COLS = 4 # number of columns in the visualizer grid.

IMAGES = 12 # number of images to visualize.

visualize_images(OUTPUT_PATH, num_cols=COLS, num_images=IMAGES)

10. Model Export

!mkdir -p $LOCAL_EXPERIMENT_DIR/experiment_dir_final

# Removing a pre-existing copy of the etlt if there has been any.

import os

output_file = os.path.join(os.environ['LOCAL_EXPERIMENT_DIR'],
                           "experiment_dir_final/resnet18_detector.etlt")

if os.path.exists(output_file):
    os.remove(output_file)

!tao detectnet_v2 export \

-m $USER_EXPERIMENT_DIR/experiment_dir_retrain/weights/resnet18_detector_pruned.tlt \

-o $USER_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector.etlt \

-k $KEY

print('Exported model:')

print('------------')

!ls -lh $LOCAL_EXPERIMENT_DIR/experiment_dir_final

A. Int8 Optimization

DetectNet_v2 supports int8 inference mode in TensorRT. In order to use int8 mode, we must calibrate the model to run 8-bit inferences:

- Generate a calibration tensorfile from the training data using detectnet_v2 calibration_tensorfile

- Use tao detectnet_v2 export to generate the int8 calibration table.

Note: For this example, we generate a calibration tensorfile containing 10 batches of training data. Ideally, you should use at least 10-20% of the training data. The more data provided during calibration, the closer int8 inferences are to fp32 inferences.
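To turn the 10-20% guideline into a number of batches for the -m argument of calibration_tensorfile, divide the target number of images by the batch size and round up. `calibration_batches` is a hypothetical helper, and which batch size applies when the tensorfile is generated is an assumption here; check your spec file.

```python
import math


def calibration_batches(num_images: int, batch_size: int, fraction: float) -> int:
    """Smallest number of batches that covers `fraction` of num_images."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return math.ceil(num_images * fraction / batch_size)
```

For example, `calibration_batches(num_training_images, batch_size=4, fraction=0.1)` reuses the image count from the dataset verification cell; `batch_size=4` is only a placeholder.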

Note: If the model was trained with QAT nodes, please do not use the post-training int8 optimization described below. Instead, export the model in int8 mode (using --data_type int8) and provide only the path to the calibration cache file (using --cal_cache_file).

!tao detectnet_v2 calibration_tensorfile -e $SPECS_DIR/detectnet_v2_retrain_resnet18_kitti.txt \

-m 10 \

-o $USER_EXPERIMENT_DIR/experiment_dir_final/calibration.tensor

!rm -rf $LOCAL_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector.etlt

!rm -rf $LOCAL_EXPERIMENT_DIR/experiment_dir_final/calibration.bin

!tao detectnet_v2 export \

-m $USER_EXPERIMENT_DIR/experiment_dir_retrain/weights/resnet18_detector_pruned.tlt \

-o $USER_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector.etlt \

-k $KEY \

--cal_data_file $USER_EXPERIMENT_DIR/experiment_dir_final/calibration.tensor \

--data_type int8 \

--batches 10 \

--batch_size 4 \

--max_batch_size 4 \

--engine_file $USER_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector.trt.int8 \

--cal_cache_file $USER_EXPERIMENT_DIR/experiment_dir_final/calibration.bin \

--verbose

B. Generate TensorRT engine

Verify engine generation using the tao-converter utility included with the docker.

The tao-converter produces optimized TensorRT engines for the platform it runs on. Therefore, to get maximum performance, please instantiate this docker and execute the tao-converter command on your target device, with the exported .etlt file and the calibration cache (for int8 mode). The tao-converter utility included in this docker only works for x86 devices with discrete NVIDIA GPUs.

For Jetson devices, please download the tao-converter for Jetson from the dev zone link here.

If you choose to integrate your model into DeepStream directly, you may do so by simply copying the exported .etlt file along with the calibration cache to the target device and updating the spec file that configures the gst-nvinfer element to point to this newly exported model. Usually this file is called config_infer_primary.txt for detection models and config_infer_secondary_*.txt for classification models.

!tao converter $USER_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector.etlt \

-k $KEY \

-c $USER_EXPERIMENT_DIR/experiment_dir_final/calibration.bin \

-o output_cov/Sigmoid,output_bbox/BiasAdd \

-d 3,384,1248 \

-i nchw \

-m 64 \

-t int8 \

-e $USER_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector.trt \

-b 4

11. Verify Deployed Model

Verify the exported model by visualizing inferences on TensorRT.

In addition to running inference on a .tlt model in step 9, the inference tool is also capable of consuming the converted TensorRT engine from step 10.B.

If the accuracy of the int8 inferences seems to degrade after int8 calibration, the calibration tensorfile may not have contained enough data to calibrate the model, or the training data may not be entirely representative of your test images, making the calibration inaccurate. In that case, you may either regenerate the calibration tensorfile with more batches of the training data and recalibrate the model, or calibrate the model on a few images from the test set. The latter may be done using the --cal_image_dir flag in the export tool. For more information, please follow the instructions in the USER GUIDE.

!tao detectnet_v2 inference -e $SPECS_DIR/detectnet_v2_inference_kitti_etlt.txt \

-o $USER_EXPERIMENT_DIR/etlt_infer_testing \

-i $DATA_DOWNLOAD_DIR/testing/image_2 \

-k $KEY

# visualize the first 12 inferenced images.

OUTPUT_PATH = 'etlt_infer_testing/images_annotated' # relative path from $USER_EXPERIMENT_DIR.

COLS = 4 # number of columns in the visualizer grid.

IMAGES = 12 # number of images to visualize.

visualize_images(OUTPUT_PATH, num_cols=COLS, num_images=IMAGES)

12. QAT workflow

This section delves into the newly enabled Quantization Aware Training (QAT) feature of DetectNet_v2. The workflow below converts the pruned model from section 6 to enable QAT and retrains it while accounting for the noise introduced by quantization in the forward pass.
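The "noise introduced by quantization" can be pictured as a quantize-dequantize round trip applied in the forward pass. The sketch below shows generic symmetric, per-tensor int8 fake quantization; `fake_quantize_int8` is a hypothetical name and the scheme is a textbook illustration, not necessarily how TAO inserts or calibrates its QAT nodes.

```python
import numpy as np


def fake_quantize_int8(x: np.ndarray, max_abs: float) -> np.ndarray:
    """Quantize-dequantize x with a symmetric, per-tensor int8 scale.

    Values are mapped to integers in [-127, 127] with scale = max_abs / 127,
    then mapped back to float, so the output carries the rounding and
    clipping error that int8 inference would introduce.
    """
    scale = max_abs / 127.0
    q = np.clip(np.round(x / scale), -127, 127)  # integer grid, clipped to int8 range
    return (q * scale).astype(x.dtype)
```

Values inside [-max_abs, max_abs] move by at most half a quantization step (max_abs / 254), while values outside are clipped to ±max_abs; training with this error present lets the weights adapt to it.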

A. Convert pruned model to QAT and retrain

All DetectNet_v2 models, both unpruned and pruned, can be converted to QAT models by setting the enable_qat parameter in the training_config component of the spec file to true.

# Printing the retrain experiment file.

# Note: We have updated the experiment file to convert the

# pretrained model to qat mode by setting the enable_qat

# parameter.

!cat $LOCAL_SPECS_DIR/detectnet_v2_retrain_resnet18_kitti_qat.txt

!tao detectnet_v2 train -e $SPECS_DIR/detectnet_v2_retrain_resnet18_kitti_qat.txt \

-r $USER_EXPERIMENT_DIR/experiment_dir_retrain_qat \

-k $KEY \

-n resnet18_detector_pruned_qat \

--gpus $NUM_GPUS

!ls -rlt $LOCAL_EXPERIMENT_DIR/experiment_dir_retrain_qat/weights

B. Evaluate QAT converted model

This section evaluates a QAT enabled pruned retrained model. The mAP of this model should be comparable to that of the pruned retrained model without QAT. However, due to quantization, it is possible sometimes to see a drop in the mAP value for certain datasets.

!tao detectnet_v2 evaluate -e $SPECS_DIR/detectnet_v2_retrain_resnet18_kitti_qat.txt \

-m $USER_EXPERIMENT_DIR/experiment_dir_retrain_qat/weights/resnet18_detector_pruned_qat.tlt \

-k $KEY \

-f tlt

C. Export QAT trained model to int8

Export a QAT trained model to a TensorRT-parsable model. This command generates an .etlt file from the trained model and serializes the corresponding int8 scales as a TRT-readable calibration cache file.

!rm -rf $LOCAL_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector_qat.etlt

!rm -rf $LOCAL_EXPERIMENT_DIR/experiment_dir_final/calibration_qat.bin

!tao detectnet_v2 export \

-m $USER_EXPERIMENT_DIR/experiment_dir_retrain_qat/weights/resnet18_detector_pruned_qat.tlt \

-o $USER_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector_qat.etlt \

-k $KEY \

--data_type int8 \

--batch_size 64 \

--max_batch_size 64 \

--engine_file $USER_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector_qat.trt.int8 \

--cal_cache_file $USER_EXPERIMENT_DIR/experiment_dir_final/calibration_qat.bin \

--verbose

D. Evaluate a QAT trained model using the exported TensorRT engine

This section evaluates a QAT-enabled, pruned and retrained model using the TensorRT int8 engine that was exported in Section C. Please note that there may be a slight difference (~0.1-0.5%) in the mAP from Section B, owing to differences in the implementation of quantization in TensorRT.

Note: The TensorRT evaluator might be slightly slower than the TAO evaluator here, because the evaluation dataloader is pinned to the CPU to avoid any clashes between TensorRT and TAO instances in the GPU. Please note that this tool was neither intended nor developed for profiling the model. It is just a means to qualitatively analyse the model.

Please use native TensorRT or DeepStream for the most optimized inferences.

!tao detectnet_v2 evaluate -e $SPECS_DIR/detectnet_v2_retrain_resnet18_kitti_qat.txt \

-m $USER_EXPERIMENT_DIR/experiment_dir_final/resnet18_detector_qat.trt.int8 \

-f tensorrt

E. Inference using QAT engine

Run inference and visualize detections on test images, using the exported TensorRT engine from Section C.

!tao detectnet_v2 inference -e $SPECS_DIR/detectnet_v2_inference_kitti_etlt_qat.txt \

-o $USER_EXPERIMENT_DIR/tlt_infer_testing_qat \

-i $DATA_DOWNLOAD_DIR/testing/image_2 \

-k $KEY

# visualize the first 12 inferenced images.

OUTPUT_PATH = 'tlt_infer_testing_qat/images_annotated' # relative path from $USER_EXPERIMENT_DIR.

COLS = 4 # number of columns in the visualizer grid.

IMAGES = 12 # number of images to visualize.

visualize_images(OUTPUT_PATH, num_cols=COLS, num_images=IMAGES)