BART for PyTorch

This resource uses open-source code maintained on GitHub (see the quick-start-guide section) and is available for download from NGC.

BART is a denoising autoencoder for pretraining sequence-to-sequence models. According to the paper, the model uses a standard seq2seq/machine translation architecture with a bidirectional encoder (like BERT) and a left-to-right decoder (like GPT).

BART is particularly effective when fine-tuned for text generation but also works well for comprehension tasks. It matches the performance of RoBERTa with comparable training resources on GLUE and SQuAD, and achieves new state-of-the-art results on a range of abstractive dialogue, question answering, and summarization tasks, with gains of up to 6 ROUGE.

Other publicly available implementations of BART include Hugging Face Transformers and fairseq.

This model is trained with mixed precision using Tensor Cores on the NVIDIA Volta, Turing, and Ampere GPU architectures. Therefore, researchers can get results 1.4 to 2.1x faster than training without Tensor Cores, while experiencing the benefits of mixed precision training. This model is tested against each NGC monthly container release to ensure consistent accuracy and performance over time.

Model architecture

BART uses a standard sequence-to-sequence Transformer architecture with GeLU activations. The base model has 6 layers each in the encoder and the decoder, whereas the large model has 12 each. The architecture has roughly 10% more parameters than the equivalently sized BERT model.

BART is trained by corrupting documents and then optimizing the reconstruction loss. The pretraining task involves randomly shuffling the order of the original sentences and a novel in-filling scheme, where spans of text are replaced with a single mask token.
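The two corruption steps described above can be illustrated with a minimal sketch. It is not the preprocessing code used by this repository: the function names, the `mask_ratio` and `max_span` parameters, and the uniform span-length sampling are assumptions made for illustration (the BART paper samples span lengths from a Poisson distribution).

```python
import random

MASK = "<mask>"  # placeholder mask symbol (assumption)

def permute_sentences(sentences, rng):
    """Return the sentences in a random order (sentence permutation)."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled

def infill_spans(tokens, rng, mask_ratio=0.3, max_span=3):
    """Replace random spans of tokens with a single MASK token each.

    Span lengths are drawn uniformly from 1..max_span for simplicity
    (assumption; the paper uses a Poisson distribution).
    """
    budget = int(len(tokens) * mask_ratio)  # maximum number of tokens to hide
    out = []
    i = 0
    while i < len(tokens):
        if budget > 0 and rng.random() < mask_ratio:
            span = min(rng.randint(1, max_span), budget, len(tokens) - i)
            out.append(MASK)  # the whole span collapses to one mask token
            i += span
            budget -= span
        else:
            out.append(tokens[i])
            i += 1
    return out

rng = random.Random(0)
document = ["the cat sat .", "it was warm .", "then it slept ."]
shuffled = permute_sentences(document, rng)
tokens = " ".join(shuffled).split()
corrupted = infill_spans(tokens, rng)
print(shuffled)
print(corrupted)
```

During pretraining, the model would receive the corrupted token sequence as encoder input and be trained to reconstruct the original document. Because each span becomes a single mask token, the model also has to predict how many tokens are missing.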

Default configuration

The BART model is similar to BERT, with the following differences:

- Decoder layers additionally perform cross-attention over the final hidden layer of the encoder.

- BART removes the additional feed-forward network that BERT uses before word prediction.
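As a rough illustration of the cross-attention step, the sketch below computes single-head scaled dot-product attention in which queries come from decoder states and keys and values come from the final encoder states. The learned query, key, and value projections and the multi-head split are omitted for brevity, and all names are hypothetical rather than taken from the repository.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(decoder_states, encoder_states):
    """Single-head attention: queries from the decoder, keys/values from the encoder.

    Learned projections are omitted (simplifying assumption).
    """
    dim = len(encoder_states[0])
    outputs = []
    for query in decoder_states:
        # Scaled dot product between this decoder position and every encoder position.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in encoder_states
        ]
        weights = softmax(scores)
        # Weighted average of the encoder states.
        outputs.append(
            [sum(w * value[j] for w, value in zip(weights, encoder_states))
             for j in range(dim)]
        )
    return outputs

encoder_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # final encoder hidden states (toy values)
decoder_in = [[0.5, 0.5], [2.0, 0.0]]               # decoder hidden states (toy values)
attended = cross_attention(decoder_in, encoder_out)
print(attended)
```

Each decoder position receives a weighted average of the encoder outputs, which is how the decoder conditions its predictions on the corrupted input.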

Inference uses beam search by default, with a beam size of 4 for the CNN-DM dataset and 6 for the XSum dataset.
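For readers unfamiliar with beam search, here is a minimal sketch of the idea with a beam size of 4. It is not the decoding code used by this repository: `toy_model` is a hypothetical stand-in for the decoder's next-token distribution, and refinements such as length normalization are left out.

```python
import math

def beam_search(next_log_probs, beam_size, max_len, eos="</s>"):
    """Keep the `beam_size` highest-scoring partial sequences at each step."""
    beams = [([], 0.0)]  # (tokens, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for token, logp in next_log_probs(tokens).items():
                candidates.append((tokens + [token], score + logp))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            # Sequences that emitted the end token stop growing.
            (finished if tokens[-1] == eos else beams).append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # sequences cut off at max_len
    return max(finished, key=lambda c: c[1])

def toy_model(prefix):
    """Hypothetical next-token distribution, returned as log-probabilities."""
    table = {
        (): {"a": 0.6, "b": 0.4},
        ("a",): {"x": 0.5, "y": 0.5},
        ("b",): {"z": 0.9, "x": 0.1},
    }
    probs = table.get(tuple(prefix), {"</s>": 1.0})
    return {token: math.log(p) for token, p in probs.items()}

best_tokens, best_score = beam_search(toy_model, beam_size=4, max_len=5)
greedy_tokens, greedy_score = beam_search(toy_model, beam_size=1, max_len=5)
print(best_tokens, math.exp(best_score))
print(greedy_tokens, math.exp(greedy_score))
```

In this toy distribution, a beam size of 1 (greedy decoding) commits to the locally best first token and ends with a less probable sequence, whereas the wider beam keeps the alternative alive long enough to find the better one.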

Feature support matrix

The following features are supported by this model:

| Feature | BART |
|---|---|
| PyTorch AMP | Yes |
| PyTorch DDP | Yes |
| LAMB | Yes |
| Multi-node | Yes |
| LDDL | Yes |
| Pre-LN | Yes |

Features

APEX is a PyTorch extension with NVIDIA-maintained utilities to streamline mixed precision and distributed training, whereas AMP is an abbreviation used for automatic mixed precision training.

DDP stands for DistributedDataParallel and is used for multi-GPU training.

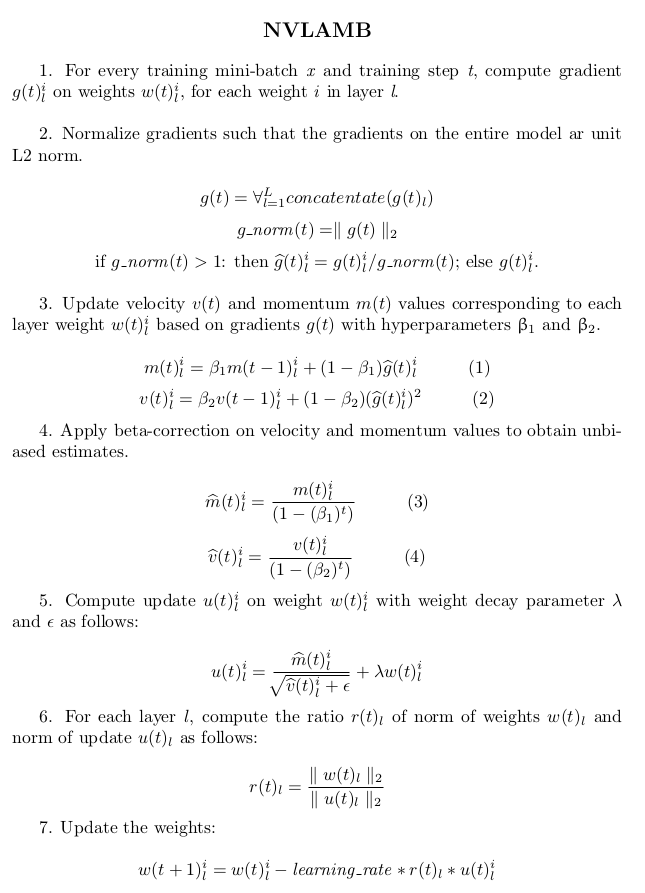

LAMB, which stands for Layerwise Adaptive Moments based optimizer, is a large-batch optimization technique that helps accelerate training of deep neural networks using large minibatches. It allows using global batch sizes of 65536 and 32768 for sequence lengths 128 and 512, respectively, compared to a batch size of 256 for Adam. The optimized implementation accumulates 1024 gradient batches in phase 1 and 4096 steps in phase 2 before updating weights once. This results in a 15% training speedup. On multi-node systems, LAMB allows scaling up to 1024 GPUs, resulting in training speedups of up to 72x in comparison to Adam. Adam has limitations on the learning rate that can be used because it is applied globally to all parameters, whereas LAMB follows a layerwise learning rate strategy.

NVLAMB adds the necessary tweaks to LAMB version 1, to ensure correct convergence. The algorithm is as follows:
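The following is a minimal sketch of a LAMB-style update in plain Python, included only to make the layerwise strategy concrete. It is not the optimizer code used by this repository. It assumes that the NVLAMB tweaks consist of pre-normalizing gradients by their global norm and applying bias correction to the moment estimates; the function name, the hyperparameter defaults, and the toy layers are hypothetical.

```python
import math

def l2_norm(values):
    return math.sqrt(sum(v * v for v in values))

def lamb_step(params, grads, m, v, step, lr=1e-3, beta1=0.9, beta2=0.999,
              eps=1e-6, weight_decay=0.01):
    """One LAMB-style update over a dict of layers (name -> list of floats).

    Updates params, m, and v in place.
    """
    # Pre-normalize all gradients by their global L2 norm (assumed NVLAMB tweak).
    global_norm = l2_norm([g for layer in grads.values() for g in layer]) or 1.0
    for name, weights in params.items():
        update = []
        for i, g in enumerate(grads[name]):
            g = g / global_norm
            m[name][i] = beta1 * m[name][i] + (1 - beta1) * g
            v[name][i] = beta2 * v[name][i] + (1 - beta2) * g * g
            m_hat = m[name][i] / (1 - beta1 ** step)  # bias correction
            v_hat = v[name][i] / (1 - beta2 ** step)
            update.append(m_hat / (math.sqrt(v_hat) + eps) + weight_decay * weights[i])
        # Layerwise trust ratio: scale the step to the size of this layer's weights.
        w_norm, u_norm = l2_norm(weights), l2_norm(update)
        trust_ratio = w_norm / u_norm if w_norm > 0 and u_norm > 0 else 1.0
        for i, u in enumerate(update):
            weights[i] -= lr * trust_ratio * u
    return params

params = {"encoder": [1.0, -2.0], "decoder": [0.01, 0.02]}  # toy layers
grads = {"encoder": [0.1, 0.1], "decoder": [0.1, 0.1]}
m = {name: [0.0] * len(p) for name, p in params.items()}
v = {name: [0.0] * len(p) for name, p in params.items()}
before = {name: list(p) for name, p in params.items()}
lamb_step(params, grads, m, v, step=1)
print(params)
```

Because of the trust ratio, each layer moves by the same fraction of its own weight norm (`lr`), regardless of how large or small its weights are. This is the layerwise behavior that distinguishes LAMB from Adam's single global learning rate.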

In this PyTorch BART example, we used global batch sizes of 64000 and 30720 for sequence lengths 128 and 512, respectively, compared to the batch size of 8000 at sequence length 512 from RoBERTa that Facebook used for BART. We trained on only 44% of the total number of tokens used for Facebook's BART, which gives a 2.7x training speedup while achieving similar accuracy.

LDDL is a library that enables scalable data preprocessing and loading. LDDL is used by this PyTorch BART example.

Pre-LN is a Transformer architecture in which layer normalization is placed inside the residual blocks. In our experiments with the Pre-LN Transformer, the loss decays faster and training is more stable, without exploding or vanishing gradients.
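The difference between the two layouts can be sketched in a few lines of plain Python. This is an illustration only, not the model code: `toy_sublayer` is a hypothetical stand-in for an attention or feed-forward sublayer, and the learnable scale and bias of layer normalization are omitted.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (no learned scale/bias)."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]

def post_ln_block(x, sublayer):
    """Post-LN: normalize after adding the residual."""
    return layer_norm([xi + si for xi, si in zip(x, sublayer(x))])

def pre_ln_block(x, sublayer):
    """Pre-LN: normalize inside the residual branch; the skip path is untouched."""
    return [xi + si for xi, si in zip(x, sublayer(layer_norm(x)))]

def toy_sublayer(x):
    return [0.5 * xi for xi in x]  # hypothetical stand-in for attention/feed-forward

x = [1.0, 2.0, 4.0]
pre = pre_ln_block(x, toy_sublayer)
post = post_ln_block(x, toy_sublayer)
print(pre)
print(post)
```

In the Pre-LN layout the input reaches the output through an unmodified identity path, which is one commonly cited reason why gradients propagate more smoothly through deep stacks.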

Mixed precision training

Mixed precision is the combined use of different numerical precisions in a computational method. Mixed precision training offers significant computational speedup by performing operations in half-precision format while storing minimal information in single-precision to retain as much information as possible in critical parts of the network. Since the introduction of Tensor Cores in the Volta architecture, followed by the Turing and Ampere architectures, switching to mixed precision has yielded significant training speedups, up to 3x overall on the most arithmetically intense model architectures. Using mixed precision training previously required two steps:

- Porting the model to use the FP16 data type where appropriate.

- Adding loss scaling to preserve small gradient values.
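To see why loss scaling matters, the sketch below uses Python's standard `struct` module to round values to IEEE 754 half precision. The gradient value and the scale factor of 1024 are hypothetical numbers chosen for illustration; they are not settings from this repository.

```python
import struct

def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

grad = 1e-8          # a small gradient, below the smallest FP16 subnormal
loss_scale = 1024.0  # hypothetical scale factor

# Stored directly in FP16, the gradient underflows to zero.
unscaled = to_fp16(grad)

# Scaling before the FP16 round trip and unscaling afterwards keeps it.
recovered = to_fp16(grad * loss_scale) / loss_scale

print(unscaled, recovered)
```

Multiplying the loss by a constant scales every gradient by the same constant through the chain rule, so small gradients are shifted into the representable FP16 range and can be divided back out in single precision before the weight update.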

For information about:

- How to train using mixed precision, see the Mixed Precision Training paper and Training With Mixed Precision documentation.

- Techniques used for mixed precision training, see the Mixed-Precision Training of Deep Neural Networks blog.

- APEX tools for mixed precision training, see the NVIDIA Apex: Tools for Easy Mixed-Precision Training in PyTorch.

Enabling mixed precision

In this repository, mixed precision is enabled in PyTorch by using the Automatic Mixed Precision (AMP) autocast context manager, torch.cuda.amp.autocast, which casts variables to half precision upon retrieval while storing them in single-precision format. Furthermore, to preserve small gradient magnitudes in backpropagation, a gradient scaling step must be included.
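A minimal sketch of this pattern is shown below. It is not the training loop from this repository: the linear layer, optimizer, and random data are toy stand-ins, and the `enabled` flags are set from `torch.cuda.is_available()` so the snippet also runs, without mixed precision, on a machine with no GPU. Recent PyTorch releases expose the same functionality as `torch.amp.autocast("cuda")` and `torch.amp.GradScaler("cuda")` and may emit a deprecation warning for the spelling used here.

```python
import torch

use_cuda = torch.cuda.is_available()
device = "cuda" if use_cuda else "cpu"

model = torch.nn.Linear(8, 2).to(device)  # toy stand-in for the BART model
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)

inputs = torch.randn(4, 8, device=device)
targets = torch.randn(4, 2, device=device)

for _ in range(3):
    optimizer.zero_grad()
    # The forward pass runs eligible ops in half precision inside autocast.
    with torch.cuda.amp.autocast(enabled=use_cuda):
        loss = torch.nn.functional.mse_loss(model(inputs), targets)
    # Scale the loss so small gradients survive in FP16, then step and
    # let the scaler adjust its scale factor.
    scaler.scale(loss).backward()
    scaler.step(optimizer)
    scaler.update()

print(loss.item())
```

The scaler unscales the gradients before the optimizer step and skips the step if it finds infinities or NaNs, which is how the gradient scaling requirement mentioned above is handled in practice.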

For an in-depth walkthrough of AMP, check out the sample usage here.

TF32

TensorFloat-32 (TF32) is the new math mode in NVIDIA A100 GPUs for handling matrix math, also called tensor operations. TF32 running on Tensor Cores in A100 GPUs can provide up to 10x speedups compared to single-precision floating-point math (FP32) on Volta GPUs.

TF32 Tensor Cores can speed up networks using FP32, typically with no loss of accuracy. It is more robust than FP16 for models which require high dynamic range for weights or activations.
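The dynamic-range point can be illustrated in plain Python. TF32 keeps the 8 exponent bits of FP32 but only 10 mantissa bits, so the sketch below emulates it by truncating an FP32 bit pattern. Truncation is a simplification chosen for illustration; the rounding performed by the hardware may differ, and the helper names are hypothetical.

```python
import struct

def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

def to_tf32(value):
    """Emulate TF32 by truncating an FP32 value to 10 mantissa bits."""
    bits = struct.unpack("<I", struct.pack("<f", value))[0]
    bits &= 0xFFFFE000  # keep sign, 8 exponent bits, top 10 of 23 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]

small = 1e-10
print(to_fp16(small))           # outside the FP16 range, flushes to zero
print(to_tf32(small))           # still representable, with reduced precision
print(to_tf32(1.0 + 2 ** -11))  # mantissa bits beyond the tenth are dropped
```

Values that underflow in FP16 remain representable in TF32 because the exponent range matches FP32, while the shorter mantissa is what allows Tensor Cores to process the data faster.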

For more information, refer to the TensorFloat-32 in the A100 GPU Accelerates AI Training, HPC up to 20x blog post.

TF32 is supported in the NVIDIA Ampere GPU architecture and is enabled by default.

Glossary

Fine-tuning: Training an already pretrained model further using a task-specific dataset for subject-specific refinements, adding task-specific layers on top if required.

Language Model: Assigns a probability distribution over a sequence of words. Given a sequence of words, it assigns a probability to the whole sequence.

Pre-training: Training a model on vast amounts of data on the same (or a different) task to build a general understanding.

Transformer: The paper Attention Is All You Need introduces a novel architecture called the Transformer, which uses an attention mechanism to transform one sequence into another.